As shown in the diagram below, let's assume that a customer has three sites. R1 is the hub, and R2 and R3 are the remote spokes. Each site has a local internet connection. Our aim is to provide connectivity between the LAN subnets of all three sites.

The IP addresses 1.1.1.1/30, 2.2.2.1/30 and 3.3.3.1/30 are the public IPs provided by the local ISPs at the respective locations (R1, R2 and R3). 192.168.X.0/24 is the LAN subnet at each site.

We could configure standard site-to-site IPsec tunnels between the hub and each spoke and run GRE over them to carry a routing protocol. In our scenario that means two static tunnels, but what if there are hundreds of spokes? We would then have to configure hundreds of GRE tunnels manually on the hub.

One solution is to use mGRE instead of GRE. Both GRE and mGRE support unicast, multicast and broadcast traffic.

To utilise mGRE, we will use DMVPN (Dynamic Multipoint VPN), which lets the hub use a single mGRE tunnel interface along with a single IPsec profile for all spokes.

Here are the components of DMVPN:

- mGRE (multipoint GRE)

- NHRP (Next Hop Resolution Protocol)

- Routing Protocol (dynamic/static)

- IPsec (optional, provides protection over the internet)

DMVPN can be configured in three different ways. They are generally called Phase 1, Phase 2 and Phase 3.

In this post we will see how Phase 1 works. Phase 1 is a hub-and-spoke deployment model in which spoke-to-spoke traffic always traverses the hub.

Let's start doing the config. We will configure the Hub (R1) first.

We are going to create a tunnel interface and assign it an IP address. By default the tunnel mode is point-to-point GRE. We will change it to mGRE and set the tunnel source to FastEthernet0/0 (the WAN interface).

One thing to notice is that we do not configure a tunnel destination, because this is a multipoint GRE tunnel.
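
As a rough sketch, the hub tunnel described above could be configured as follows. The tunnel number `Tunnel0` and the tunnel subnet `10.0.0.0/24` are assumptions made for illustration; the post does not state them.

```
! R1 (hub) - tunnel number and tunnel subnet are assumed values
interface Tunnel0
 ip address 10.0.0.1 255.255.255.0
 ! WAN interface that holds the public IP 1.1.1.1
 tunnel source FastEthernet0/0
 ! change the default point-to-point GRE mode to multipoint GRE
 tunnel mode gre multipoint
 ! note: no "tunnel destination" on an mGRE interface
```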

Now we will configure the NHRP parameters. NHRP maps a tunnel IP address to an NBMA IP address (the WAN public IP), either statically or dynamically.

The NHRP network ID does not have to match on the hub and its spokes because it is locally significant; however, there is no reason not to keep it the same everywhere.

To run a dynamic routing protocol over DMVPN, we have to enable multicast on the hub tunnel so that routing protocol packets reach the spokes that register dynamically.
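
The two NHRP settings on the hub might look like this. It is only a sketch: the interface name `Tunnel0` and the network ID value `1` are assumed, not taken from the post.

```
! R1 (hub) - interface name and network ID are assumed values
interface Tunnel0
 ! NHRP network ID (locally significant)
 ip nhrp network-id 1
 ! replicate multicast to every spoke that registers dynamically
 ip nhrp map multicast dynamic
```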

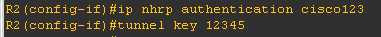

We can also configure NHRP authentication and a tunnel key for security. Both are optional, but if they are used, the values must match on the hub and all of its spokes.
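
A possible form of these optional settings is shown below. The authentication string `dmvpn123`, the key `123` and the interface name `Tunnel0` are placeholder values chosen for illustration.

```
! R1 (hub) - string, key and interface name are assumed values
interface Tunnel0
 ! NHRP authentication string (up to 8 characters)
 ip nhrp authentication dmvpn123
 ! tunnel key, must be identical on the hub and the spokes
 tunnel key 123
```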

Let's configure the spoke R2. In Phase 1, the tunnel on the spoke is point-to-point GRE, so we will configure the tunnel interface, IP address, tunnel source and tunnel destination. The tunnel destination will be the public IP of R1.
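
A minimal sketch of the R2 tunnel could look like this. The tunnel number, the tunnel subnet `10.0.0.0/24` and the use of `FastEthernet0/0` as R2's WAN interface are assumptions; only the destination `1.1.1.1` comes from the post.

```
! R2 (spoke) - tunnel number, tunnel subnet and WAN interface are assumed
interface Tunnel0
 ip address 10.0.0.2 255.255.255.0
 tunnel source FastEthernet0/0
 ! point-to-point GRE (the default mode) towards R1's public IP
 tunnel destination 1.1.1.1
```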

Now we will configure the NHRP parameters. We will configure the NHRP network ID (same value as on the hub).

We will now statically map R1's tunnel IP (private IP) to its NBMA address (public IP).

If we want to run a dynamic routing protocol over DMVPN, we have to specify the NBMA (public) IP address that should receive the multicast/broadcast packets. In our case, this is R1's public IP (1.1.1.1).

We will also have to specify the IP address of the NHRP server. This will be the tunnel IP address of R1.
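
Put together, the spoke-side NHRP settings might look like the sketch below. The hub tunnel IP `10.0.0.1`, the network ID `1` and the interface name `Tunnel0` are assumed values; `1.1.1.1` is R1's public IP from the post.

```
! R2 (spoke) - hub tunnel IP, network ID and interface name are assumed
interface Tunnel0
 ip nhrp network-id 1
 ! static mapping: R1 tunnel IP -> R1 NBMA (public) IP
 ip nhrp map 10.0.0.1 1.1.1.1
 ! send multicast/broadcast packets to R1's NBMA address
 ip nhrp map multicast 1.1.1.1
 ! R1's tunnel IP acts as the NHRP server (NHS)
 ip nhrp nhs 10.0.0.1
```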

We can now complete the configuration by specifying the NHRP authentication string and the tunnel key, using the same values as on the hub.

We will apply a similar config on the spoke R3.

If we look at R1, we can see that there are two NHRP entries, one for each spoke.
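
For reference, a complete R3 tunnel in the same style might look like this. Everything except R1's public IP `1.1.1.1` is an assumed value (tunnel number, `10.0.0.0/24` tunnel subnet, WAN interface, network ID, authentication string and key).

```
! R3 (spoke) - all values except 1.1.1.1 are assumed
interface Tunnel0
 ip address 10.0.0.3 255.255.255.0
 tunnel source FastEthernet0/0
 tunnel destination 1.1.1.1
 ip nhrp network-id 1
 ip nhrp map 10.0.0.1 1.1.1.1
 ip nhrp map multicast 1.1.1.1
 ip nhrp nhs 10.0.0.1
 ! optional, must match the hub if used
 ip nhrp authentication dmvpn123
 tunnel key 123
```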

The idea behind NHRP is that each spoke registers its NBMA address (public IP) and tunnel IP (private IP) with the DMVPN hub when it boots up, and can query the hub's NHRP database for the addresses of other spokes.

We can see that R1 knows the public IP (NBMA) and the related tunnel IP of both R2 and R3.

On the spoke, we can see its own NHRP mapping for the hub. The output also shows that the NHRP server is responding.
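
The checks described above are typically done with show commands along these lines. Output is omitted here because it depends on the addressing in use.

```
! on R1 (hub): dynamic NHRP entries learned from the spokes
show ip nhrp
show dmvpn
! on R2 or R3 (spoke): static mapping for the hub and NHS status
show ip nhrp
show ip nhrp nhs detail
```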

Now we will run EIGRP on the hub and both spokes. We will advertise the tunnel IPs and the relevant loopbacks through EIGRP.

Configs on R1, R2 and R3:
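
A sketch of what these EIGRP configs could look like follows. The AS number 10 matches the command quoted later in the post; the tunnel subnet `10.0.0.0/24` and loopbacks taken from each site's 192.168.X.0/24 range are assumptions.

```
! R1 (hub) - tunnel subnet and loopback subnets are assumed values
router eigrp 10
 network 10.0.0.0 0.0.0.255
 network 192.168.1.0 0.0.0.255
 no auto-summary
!
! R2 (spoke)
router eigrp 10
 network 10.0.0.0 0.0.0.255
 network 192.168.2.0 0.0.0.255
 no auto-summary
!
! R3 (spoke)
router eigrp 10
 network 10.0.0.0 0.0.0.255
 network 192.168.3.0 0.0.0.255
 no auto-summary
```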

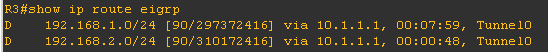

After applying these configs, we can see that R1 has started receiving the loopback prefixes from R2 and R3.

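However, R2 will not receive R3's loopback and vice versa because of EIGRP's split horizon rule: R1 learns both prefixes on its tunnel interface and will not advertise them back out of the same interface.

We have to disable split horizon on R1's tunnel interface so that R2 and R3 receive each other's loopback prefixes.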
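
On R1 this is a single interface-level command. The interface name `Tunnel0` is an assumption; the AS number 10 matches the one used elsewhere in the post.

```
! R1 (hub) - interface name is an assumed value
interface Tunnel0
 ! allow routes learned on the tunnel to be advertised back out of it
 no ip split-horizon eigrp 10
```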

Let's verify the connectivity from R2's loopback to R3's loopback.

The traceroute confirms that the packets are traversing the hub R1.
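
A test of this kind can be run from R2 roughly as follows. The loopback name `Loopback0` and R3's loopback address `192.168.3.1` are assumed values. With the hub in the path, the first hop in the output should be R1's tunnel address.

```
! on R2 - loopback name and destination address are assumed
traceroute 192.168.3.1 source Loopback0
```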

Even if we apply "no ip next-hop-self eigrp 10" under the tunnel interface on R1, the spokes will still talk to each other via the hub R1, because the spoke tunnels are point-to-point and their only destination is R1.
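
For completeness, the command would be applied on R1 as sketched below (interface name `Tunnel0` assumed). In Phase 1 it does not change the forwarding path, since each spoke can only send tunnel traffic to R1.

```
! R1 (hub) - interface name is an assumed value
interface Tunnel0
 ! keep the originating spoke as the EIGRP next hop
 no ip next-hop-self eigrp 10
```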

Due to this limitation, Phase 1 is not widely used in real-world designs. DMVPN Phase 2 resolves this problem and lets us build dynamic spoke-to-spoke tunnels. We will cover Phase 2 in our next post.

DMVPN is generally implemented over the public Internet, so we have to apply an IPsec profile to secure the communication. We haven't covered the IPsec configuration in this post as it's very straightforward.
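
Although the post does not cover it, a generic sketch of IPsec protection on a DMVPN tunnel is given below for orientation. All names (`DMVPN-TS`, `DMVPN-PROFILE`), the pre-shared key placeholder and the algorithm choices are assumptions, not the author's configuration; pick algorithms that your platform and security policy support.

```
! applied on the hub and on every spoke - all names and values are assumed
crypto isakmp policy 10
 encryption aes 256
 hash sha
 authentication pre-share
 group 14
! placeholder pre-shared key, accepted from any peer address
crypto isakmp key MY-PSK address 0.0.0.0 0.0.0.0
!
crypto ipsec transform-set DMVPN-TS esp-aes 256 esp-sha-hmac
 mode transport
!
crypto ipsec profile DMVPN-PROFILE
 set transform-set DMVPN-TS
!
interface Tunnel0
 ! encrypt the GRE traffic of this tunnel with the profile above
 tunnel protection ipsec profile DMVPN-PROFILE
```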
